I'm usually a bit suspicious of sentiment analysis, which is the Natural Language Processing task of scoring texts according to how "positive" or "negative" they are. This is difficult largely because the true "sentiment" of a text is really not well-defined in the first place.

However, on a larger scale the vagueness of "sentiment" can in some sense average out, causing measured sentiment to carry a meaningful signal when aggregated (which is how it's usually used in practice). To investigate this, I did some experiments on comments from the latest Ludum Dare game jam.

Ludum Datum

If you're not already familiar with Ludum Dare, it's an event in which participants make a video game in 48 or 72 hours and then play, rate, and comment on each other's games.

Ludum Dare comments are essentially short reviews, left by users who also submit anonymous numerical rankings. This is basically the canonical setting where sentiment analysis is supposed to be meaningful, so it seemed like a great test case for investigating how useful sentiment analysis really is.

I downloaded all comments for Ludum Dare 48 via the ldjam.com API, and measured their sentiment using VADER, a popular sentiment analysis model targeting social media.
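For anyone who wants to try something similar, here is a minimal sketch of the scoring step. It assumes the third-party `vaderSentiment` package and uses VADER's "compound" score, which is my guess at the relevant output since it ranges from -1 to 1. The comment record layout (a dict with a `"body"` key) and the function names are hypothetical, and fetching comments from the API is left out entirely.

```python
from typing import Callable, Iterable


def make_vader_scorer() -> Callable[[str], float]:
    """Return a function mapping text to VADER's compound score in [-1, 1].

    Assumes the third-party `vaderSentiment` package is installed; the
    import is kept local so the rest of the sketch works without it.
    """
    from vaderSentiment.vaderSentiment import SentimentIntensityAnalyzer

    analyzer = SentimentIntensityAnalyzer()
    return lambda text: analyzer.polarity_scores(text)["compound"]


def score_comments(comments: Iterable[dict], scorer: Callable[[str], float]) -> list:
    """Return copies of the comment records with a "sentiment" field added.

    The record layout (a dict with a "body" key) is an assumption made
    for illustration, not the actual shape of the ldjam.com API response.
    """
    return [{**c, "sentiment": scorer(c["body"])} for c in comments]
```

Passing the scorer in as an argument makes it easy to swap VADER for another model, or for a stub when testing.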

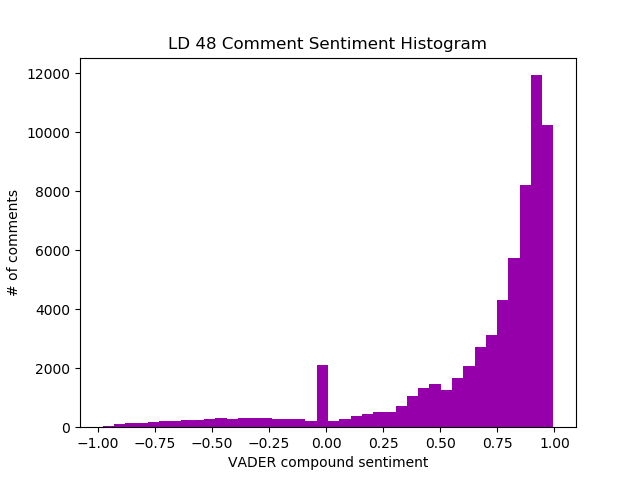

If you've ever participated in Ludum Dare or a similar event, you probably won't be surprised that the vast majority of comments are positive:

The average sentiment is 0.668 (where 1 is most positive, -1 is most negative, and 0 is neutral), and only 7.5% of comments are negative (sentiment < 0). The sentiment is slightly lower among anonymous comments, but even then the average sentiment is 0.336 and only 21.4% of anonymous comments are negative. Moreover, there are hardly any anonymous comments (only 112 out of 82973 comments, or about 0.13%, are anonymous).
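The two summary numbers quoted here (the mean score and the share of comments scoring below zero) are simple to compute once you have a list of scores. A small sketch, with made-up input values purely for illustration:

```python
from statistics import mean


def summarize(scores):
    """Return (mean score, fraction of scores strictly below zero)."""
    scores = list(scores)
    negative = sum(1 for s in scores if s < 0)
    return mean(scores), negative / len(scores)


# Illustrative values only, not real Ludum Dare data.
avg, frac_negative = summarize([0.8, 0.6, -0.2, 0.9])
print(f"mean={avg:.3f}, negative={frac_negative:.1%}")
```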

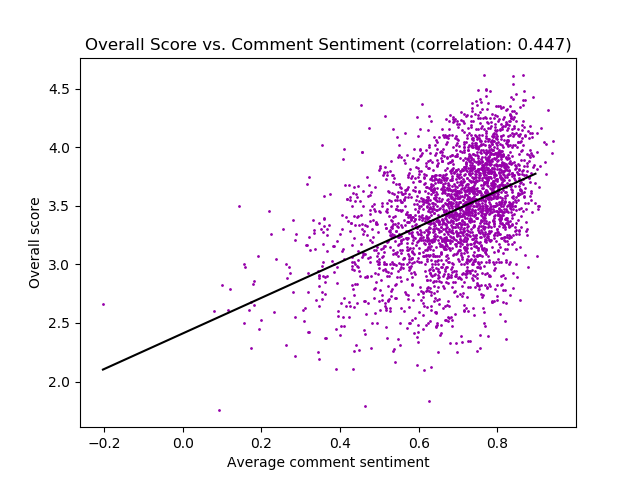

So, given that comments are overwhelmingly positive, is sentiment (as measured by VADER) a meaningful measurement? Well, it does seem that the average sentiment of comments on a game is correlated with the game's final score:

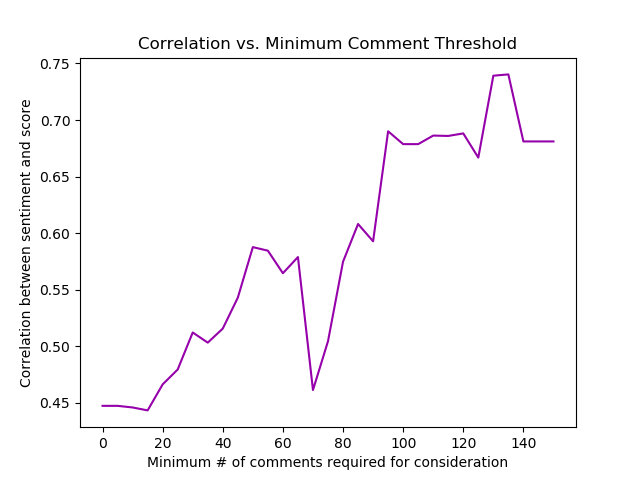

So there is a signal here, but it's not particularly strong. However, if we restrict our analysis to games with a larger number of comments, the correlation becomes a lot higher:

This is consistent with the intuition that on a larger scale the noise and uncertainty in individual sentiment scores "average out" a bit (presumably related to the Law of Large Numbers).
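One way to make this intuition concrete is a toy model. The assumption here is mine and not something I verified on this data: each comment's measured score $s_i$ is the game's underlying value $\mu$ plus independent zero-mean noise $\varepsilon_i$ with variance $\sigma^2$. Then:

$$
s_i = \mu + \varepsilon_i, \qquad \operatorname{Var}(\varepsilon_i) = \sigma^2
\quad\Longrightarrow\quad
\operatorname{Var}\!\left(\frac{1}{n}\sum_{i=1}^{n} s_i\right) = \frac{\sigma^2}{n}
$$

Under that model the standard deviation of a game's average sentiment shrinks like $1/\sqrt{n}$, so the average over 40 comments would be about $\sqrt{40/10} = 2$ times less noisy than the average over 10. Real comments are unlikely to be fully independent, so treat this as a rough guide rather than a precise prediction.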

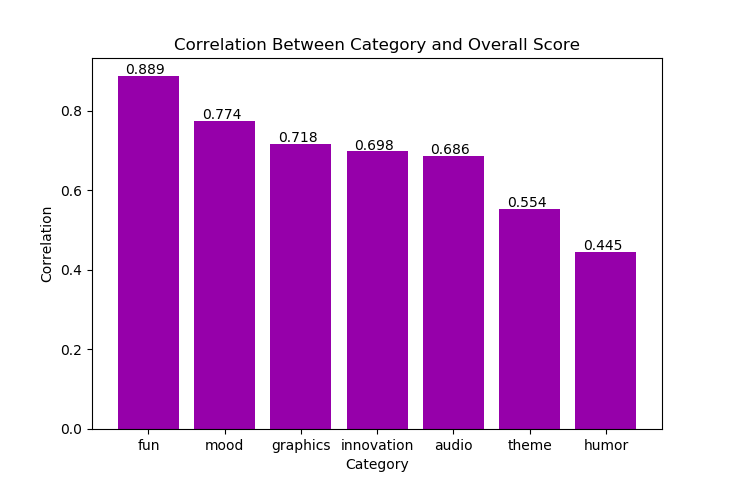
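The per-game comparison described above, including the restriction to games with many comments, might be computed along these lines. This is a sketch using the Pearson correlation coefficient, which is an assumption on my part about the appropriate measure; the input layout and function names are hypothetical.

```python
from collections import defaultdict
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def sentiment_score_correlation(comments, game_scores, min_comments=1):
    """Correlate each game's mean comment sentiment with its final score.

    comments: iterable of (game_id, sentiment) pairs
    game_scores: dict mapping game_id to the game's final score
    Games with fewer than `min_comments` comments, or with no entry in
    `game_scores`, are left out.
    """
    by_game = defaultdict(list)
    for game_id, sentiment in comments:
        by_game[game_id].append(sentiment)
    kept = [
        g for g, s in by_game.items()
        if len(s) >= min_comments and g in game_scores
    ]
    means = [sum(by_game[g]) / len(by_game[g]) for g in kept]
    scores = [game_scores[g] for g in kept]
    return pearson(means, scores)
```

Raising `min_comments` trades sample size (fewer games) for less noisy per-game averages.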

For comparison, here are the correlations between the overall score and various category scores (measured over all events since LD38):

So if you have a very large number of comments, VADER sentiment can be about as relevant to the overall score as people's actual numerical reviews in categories like Audio, Innovation, and Graphics. But for the vast majority of games (about 90% have fewer than 40 ratings) the signal is about as meaningful as the Humour score, which is the least important of all category scores.

But what happens if we look at the individual "most positive" or "most negative" comments?

Well, it turns out that most of them don't seem particularly positive or negative, but they tend to be particularly long. I think this is because VADER is designed for short (tweet-length) texts, and doesn't do so well on longer stuff.

If we only look at tweet-sized texts (at most 140 characters), then the 5 "most negative" comments are:

Had fun trying to get to the bottom! Wasn't easy, but I enjoyed trying desperately to avoid the evil pink bubbles

I think its good, but to punishing, I had a lot of iron and gold, but no gems :/ Died from a bat

Almost no gameplay but tons of text . I am lying bad in front of boss. Perhaps, it is bad ending. I am not sure if there is any good ending.

the game is good... but i hate the tv... to hell with ads

I just couldn't get past the third screen :( The enemies are very hard to kill!

Three of these seem to me to be explicitly positive reviews, although they show negative sentiment towards individual aspects of the game. This supports my impression that while sentiment analysis may be useful in the aggregate, it's of limited usefulness in the case of individual comments.
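The filtering used to produce the list above can be sketched as follows. The function name and the `(text, sentiment)` input layout are hypothetical; the 140-character limit and the choice of 5 results mirror the numbers in the text.

```python
def most_negative_short(comments, max_chars=140, k=5):
    """Return the k lowest-scoring comments of at most max_chars characters.

    comments: iterable of (text, sentiment) pairs
    The result is sorted from most negative to least negative.
    """
    short = [(text, s) for text, s in comments if len(text) <= max_chars]
    return sorted(short, key=lambda pair: pair[1])[:k]
```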

Philosophical Problems

It seems that sentiment analysis can be useful in the aggregate but isn't always that great in individual instances. The big question, then, is whether this is just a technological problem to be solved, or whether trying to measure "sentiment" on the individual level isn't well-defined in the first place. I lean towards the latter.

As with anything involving emotion, different people express "positive" and "negative" sentiment in different ways. One person's "great" is another's "pretty good." If you dig deeper into this, you run into the problems of "private language" discussed in Wittgenstein's Philosophical Investigations.

But even ignoring that, there are lots of other uncertainties regarding the "goal" of sentiment analysis. Are we interested in the emotion being experienced by the writer, or only the emotion that they deliberately convey? Or perhaps we care about the emotion intended to be produced in the reader? And what should we do about statements like "this is the epitome of capitalism," which can convey different value judgements depending on your worldview?

Even within the context of product reviews associated with numerical scores, there are plenty of grey areas that undermine the idea of a meaningful "ground truth". Some users might rate a "so bad it's good" movie poorly, while others who felt more-or-less the same about the movie might rate it highly due to differing opinions about how to review movies. A user could rate a tragedy or horror movie highly while emphasizing the "negative" emotions (sadness, terror, etc.) that it made them feel. And there are countless other complications. So a model that predicts whether or not a product review was associated with a positive or negative score is doing just that: predicting review scores, not "sentiment" in a meaningful sense.

There are, of course, some complications that are no problem for a human but still difficult for a machine, including sarcasm detection and per-entity sentiment analysis (i.e. the "game = good but tv = bad" problem from our examples). These make sentiment analysis an interesting technological problem. But while ongoing work makes machines more capable of overcoming these hurdles, it seems to me that mere technological progress will never really "solve" the fundamental problems with "sentiment analysis" as a singular goal.

So how do we square the fact that "sentiment" is poorly defined with the fact that it seems to be meaningful when aggregated? I think the correct interpretation of sentiment scores is that they represent a noisy approximation of some sort of latent variable that is correlated with more well-defined things that we might be interested in (e.g. review scores, voting intentions, brand loyalty). Maybe you could argue that it does make sense to refer to this latent variable as "sentiment" after all.

In conclusion, I think sentiment analysis can have its uses for identifying trends in large amounts of text data, but I'm skeptical about reading much into it on the individual level.